Understanding Lakehouse Compaction

Why Tiny Files Break Big Data

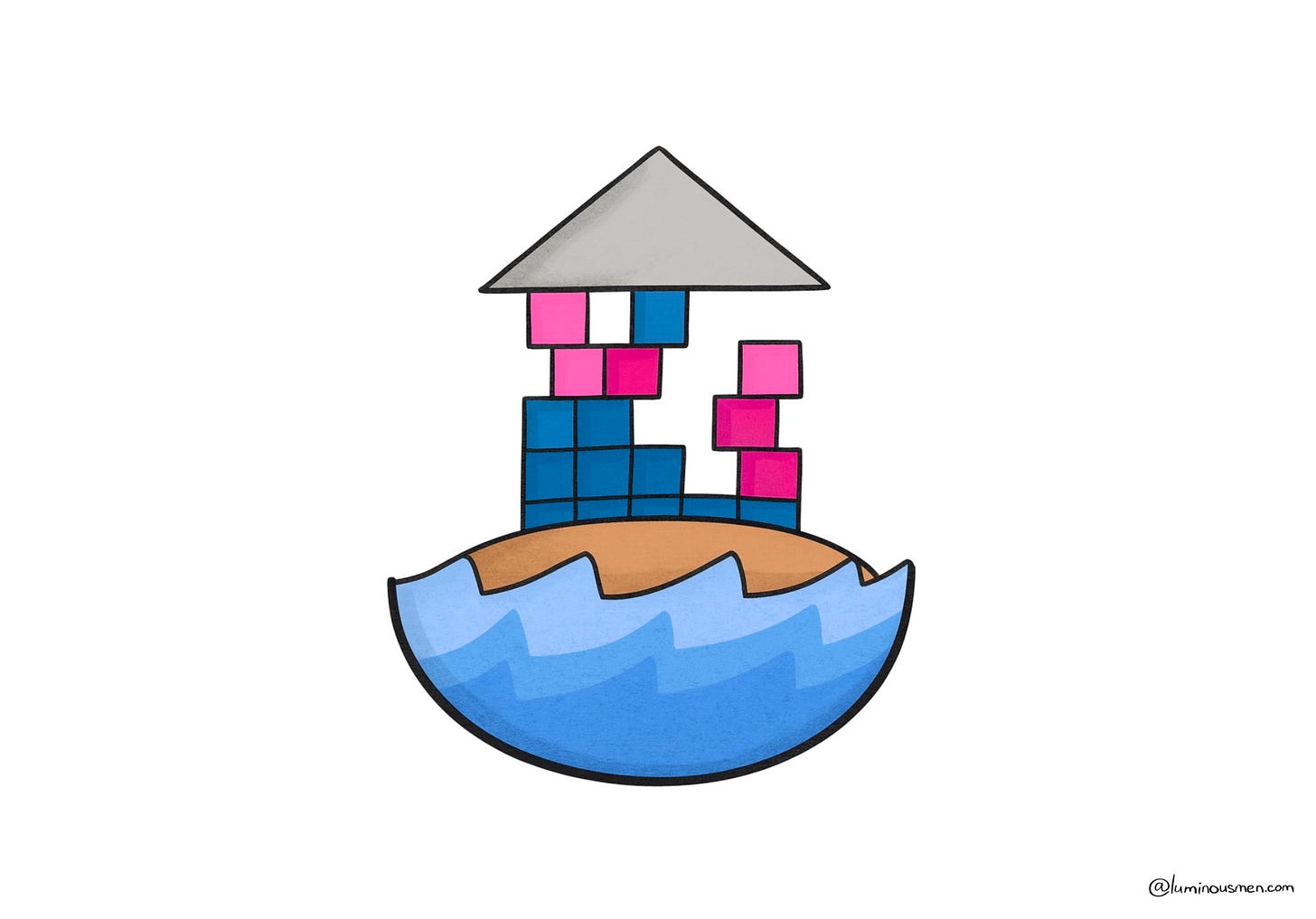

Your Lakehouse won't collapse under petabytes of data — it'll collapse under 5KB files.

Streaming and CDC pipelines can produce dozens of them every minute. After a day, that's tens of thousands; after a month, millions. These files are what's killing your system: they inflate metadata, make queries choke on the overhead of opening each one, and push your cloud bill through the roof.

At some point, the only way out is to turn on something called compaction. What’s compaction? How does that solve our problem? In today’s blog post, we’re going to sort that out.

The Small Files Problem

Small files in a Lakehouse don't just show up out of nowhere. No one sits down and says "hmm, let's write a hundred thousand 5KB Parquet files, what could go wrong?" But that's exactly what ends up happening in practice.

Why though?

Streaming ingestion

You use Spark Structured Streaming or Flink to pipe data from Kafka into your Lakehouse. These pipelines usually commit data in small increments (micro-batches in Spark, checkpoints in Flink), often covering just one or two seconds of data at a time. Each commit writes at least one new file, and because there isn't much data in a one-second slice of Kafka, each of those files ends up tiny. After a day of one-second batches, congrats: you now own a collection of 86,400 Parquet shards.
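To put those numbers in perspective, here is a small back-of-the-envelope sketch. It assumes one file per micro-batch by default; `files_per_day` and `files_per_batch` are hypothetical names for illustration, and real sinks often write several files per batch (roughly one per write task or partition), so the single-file case is a lower bound.

```python
# Back-of-the-envelope estimate of how many files a streaming sink writes per day.
# ASSUMPTION: every micro-batch commits `files_per_batch` new files; real sinks
# often write one file per write task or partition, so files_per_batch=1 is a
# lower bound.

SECONDS_PER_DAY = 24 * 60 * 60


def files_per_day(trigger_interval_s: float, files_per_batch: int = 1) -> int:
    """Files written in one day for a given micro-batch trigger interval."""
    batches = SECONDS_PER_DAY / trigger_interval_s
    return int(batches * files_per_batch)


for interval in (1, 2, 10, 60):
    print(f"trigger every {interval:>2} s -> {files_per_day(interval):>6,} files/day")
```

Raising the trigger interval (for example with `trigger(processingTime="10 seconds")` on a Spark `DataStreamWriter`) cuts the file count proportionally, at the cost of higher end-to-end latency.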

CDC (Change Data Capture)

Change Data Capture (CDC) tools — like Debezium or AWS DMS — stream out row-level changes from databases such as PostgreSQL or MySQL. Instead of bulk dumps, they emit a steady flow of inserts, updates, and deletes as they happen in the source system.

On the Lakehouse side, each of those incremental batches gets written into storage. If your database is generating updates every second, and your ingestion pipeline (Spark, Flink, etc.) writes each batch to a new Parquet file, you quickly end up with thousands of tiny files. The problem isn’t the data itself (a single row update is small by nature); it’s that every handful of rows ends up wrapped in a full Parquet file, with its own magic bytes, footer, schema, and column statistics.
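A rough way to see why that overhead hurts is to treat every file as carrying a fixed metadata cost on top of its rows. The sketch below does exactly that; `FOOTER_BYTES` and `ROW_BYTES` are made-up illustrative numbers, not measured Parquet sizes (a real footer grows with schema width, row-group count, and column statistics).

```python
# Rough model: each file = fixed metadata overhead + its rows.
# ASSUMPTION: both constants are illustrative, not measured Parquet sizes.

FOOTER_BYTES = 2_000  # hypothetical per-file cost: magic bytes, footer, schema, stats
ROW_BYTES = 200       # hypothetical encoded size of one row


def overhead_fraction(rows_per_file: int) -> float:
    """Share of a file's bytes that is metadata rather than row data."""
    data_bytes = rows_per_file * ROW_BYTES
    return FOOTER_BYTES / (FOOTER_BYTES + data_bytes)


for rows in (1, 10, 1_000, 1_000_000):
    print(f"{rows:>9,} rows/file -> {overhead_fraction(rows):6.2%} overhead")
```

The exact numbers don't matter; the shape does. Overhead is negligible once a file holds many rows, and dominant when it holds a handful.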

And query engines don’t magically merge those files on read. Spark, Presto, Athena: they all have to open each file separately, read its footer, validate the schema, and deserialize the data. On object storage, every one of those opens is a separate request. Scanning ten big Parquet files is fast; scanning ten thousand micro-files is painful. The bottleneck isn’t the volume of data; it’s the I/O and metadata overhead created by so many individual objects.
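A toy cost model makes the point concrete: charge a fixed cost per file (open, footer read, schema check) plus a bandwidth-bound cost per byte. Both constants below are hypothetical, and the model assumes files are read one after another; real engines read many files in parallel, which shrinks the absolute numbers but not the per-file tax.

```python
# Toy model of scan time: fixed cost per file + bandwidth-bound cost per byte.
# ASSUMPTION: both constants are illustrative, not measurements of any engine.

PER_FILE_S = 0.02       # hypothetical cost to open a file and read its footer
THROUGHPUT_BPS = 200e6  # hypothetical read throughput, bytes per second


def scan_seconds(num_files: int, total_bytes: float) -> float:
    """Estimated sequential time to scan `total_bytes` spread over `num_files` files."""
    return num_files * PER_FILE_S + total_bytes / THROUGHPUT_BPS


total = 1e9  # the same 1 GB of data in both layouts
print(f"10 files:     {scan_seconds(10, total):.1f} s")
print(f"10,000 files: {scan_seconds(10_000, total):.1f} s")
```

The data volume term is identical in both layouts; only the per-file term changes, and with ten thousand files it dwarfs everything else.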

Concurrency

Multiple writers targeting the same table at the same time can be another source of tiny files. A typical scenario: you have a streaming job continuously appending micro-batches while a batch process runs updates or inserts concurrently. Each writer produces its own set of files. On their own, those batches might not look too bad, but together they add up, and the table ends up littered with far more fragments than either workload would have created alone.
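Here is a back-of-the-envelope model of two uncoordinated writers sharing one table. The workloads (a streaming job committing one file every 2 seconds, a batch job committing 40 files every 5 minutes) and the `Writer` / `files_written` names are hypothetical, chosen only to show how the counts stack up.

```python
from dataclasses import dataclass


@dataclass
class Writer:
    name: str
    commit_every_s: int    # how often this writer commits, in seconds
    files_per_commit: int  # new data files it writes per commit


def files_written(writers: list[Writer], window_s: int) -> dict[str, int]:
    """Files each writer adds over `window_s` seconds, plus the combined total."""
    counts = {
        w.name: (window_s // w.commit_every_s) * w.files_per_commit for w in writers
    }
    counts["total"] = sum(counts.values())
    return counts


# Hypothetical workloads sharing one table for an hour.
stream = Writer("stream", commit_every_s=2, files_per_commit=1)
batch = Writer("batch_updates", commit_every_s=300, files_per_commit=40)
print(files_written([stream, batch], window_s=3_600))
```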

In formats like Delta Lake or Iceberg, the pain goes beyond the files themselves. Every file also becomes an entry in the table's metadata: an action in Delta's transaction log, or an entry in an Iceberg manifest. As the file count climbs, that metadata balloons, and even planning a query can start to feel slow. At that point, performance problems aren’t coming from the size of your data, but from the sheer number of files and metadata entries the engine has to juggle.
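To see why planning slows down, consider what it takes to work out which files are live. The sketch below is a deliberately simplified, in-memory imitation of a Delta-style log in which each JSON line is an `add` or `remove` action; real Delta logs are split into per-commit files and periodically checkpointed to Parquet. `make_log` and `replay` are hypothetical helpers, not a library API.

```python
import json

# ASSUMPTION: a simplified, in-memory imitation of a Delta-style log.
# Each line is one JSON action; "add" registers a data file, "remove" retires it.


def make_log(num_commits: int, files_per_commit: int, file_size: int = 5_000) -> list[str]:
    """Build a toy log in which every commit adds `files_per_commit` files."""
    lines = []
    for c in range(num_commits):
        for f in range(files_per_commit):
            path = f"part-{c:05d}-{f}.parquet"
            lines.append(json.dumps({"add": {"path": path, "size": file_size}}))
    return lines


def replay(log_lines: list[str]) -> tuple[set[str], int]:
    """Rebuild the set of live files; return it with the number of actions read."""
    live: set[str] = set()
    actions_read = 0
    for line in log_lines:
        action = json.loads(line)
        actions_read += 1
        if "add" in action:
            live.add(action["add"]["path"])
        elif "remove" in action:
            live.discard(action["remove"]["path"])
    return live, actions_read


# Same 50 MB of data, two layouts: 10,000 tiny files vs. 10 large ones.
for commits, size in ((10_000, 5_000), (10, 5_000_000)):
    live, actions = replay(make_log(commits, files_per_commit=1, file_size=size))
    print(f"{len(live):>6} live files -> {actions:>6} log actions to replay")
```

Delta Lake's Parquet checkpoints spare readers from replaying the whole JSON history, but a checkpoint still lists every live file, so ten thousand tiny files still means ten thousand entries to read.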

Wrong partition strategy

Sometimes the mess isn't caused by streaming data or CDC at all, but by how you designed the table. A bad partitioning scheme can wreck a table faster than any CDC pipeline.
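To see how partitioning multiplies files, here is a sketch that counts how many files a single write would produce, under the simplifying assumption of one file per distinct partition value (a single write task; with several tasks the count can only go up). The sample batch and the choice of `user_id` as an over-fine partition column are hypothetical.

```python
# ASSUMPTION: one output file per distinct partition value per write,
# as with a single write task. Column names and data are hypothetical.


def partition_fanout(rows: list[dict], partition_cols: list[str]) -> tuple[int, float]:
    """Return (files written, average rows per file) for one batch."""
    if not rows:
        return 0, 0.0
    keys = {tuple(row[col] for col in partition_cols) for row in rows}
    return len(keys), len(rows) / len(keys)


# One day's batch of 1,000 events from 1,000 different users.
batch = [{"event_date": "2024-05-01", "user_id": i, "amount": i * 1.5} for i in range(1_000)]

print(partition_fanout(batch, ["event_date"]))             # coarse: by day
print(partition_fanout(batch, ["event_date", "user_id"]))  # too fine: by day and user
```

Same 1,000 rows, but the second scheme turns one reasonably sized file into a thousand one-row files, and every later write repeats the pattern.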